This is a summary, made of bullets and passages extracted from AWS whitepapers and from online courses such as A Cloud Guru, Linux Academy, and Udemy, for studying Cost Management concepts.

Cloud computing helps businesses in the following ways:

- Reduces costs and complexity

- Adjusts capacity on demand

- Reduces time to market

- Increases opportunities for innovation

- Enhances security

When you decouple from the data center, you’ll be able to:

- Decrease your TCO: Eliminate many of the costs related to building and maintaining a data center or colocation deployment. Pay for only the resources you consume.

- Reduce complexity: Reduce the need to manage infrastructure, investigate licensing issues, or divert resources.

- Adjust capacity on the fly: Add or reduce resources, depending on seasonal business needs, using infrastructure that is secure, reliable, and broadly accessible.

- Reduce time to market: Design and develop new IT projects faster.

- Deploy quickly, even worldwide: Deploy applications across multiple geographic areas.

- Increase efficiencies: Use automation to reduce or eliminate IT management activities that waste time and resources.

- Innovate more: Spin up a new server and try out an idea. Each project moves through the funnel more quickly because the cloud makes it faster (and cheaper) to deploy, test, and launch new products and services.

- Spend your resources strategically: Switch to a DevOps model to free your IT staff from operations and maintenance that can be handled by the cloud services provider.

- Enhance security: Spend less time conducting security reviews on infrastructure. Mature cloud providers have teams of people who focus on security, offering best practices to ensure you’re compliant, no matter what your industry.

AWS Economics

The AWS infrastructure serves more than one million active customers in over 190 countries and offers the following benefits to its users:

- Global operations

- High availability

- Low costs due to high volume

- Only pay for what you use

- Economies of scale

- Financial flexibility

Capital Expenses (CapEx)

Money spent on long-term assets such as property, buildings, and equipment.

Operational Expenses (OpEx)

Money spent on the ongoing costs of running the business.

Usually considered variable expenses.

Total Cost of Ownership (TCO)

A comprehensive look at the entire cost model of a given decision or option, often including both hard costs and soft costs.

Return on Investment (ROI)

The amount an entity can expect to receive back within a certain amount of time given an investment.

TCO vs ROI

- Many times, organizations don’t have a good handle on their full on-prem data center cost.

- Soft costs are rarely tracked or even understood as a tangible expense.

- The learning curve will vary considerably from person to person.

- Business plans usually include many assumptions, which in turn require supporting organizations to create derivative assumptions, sometimes several layers deep.
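
The TCO and ROI definitions above can be made concrete with a small worked comparison. The following Python sketch is illustrative only: every dollar figure is a hypothetical assumption, the `CostModel` type is invented for this example, and ROI uses the common simple convention (gain minus investment, divided by investment), which is only one of several ways to compute it.

```python
from dataclasses import dataclass

@dataclass
class CostModel:
    """Hypothetical yearly cost model; all figures are illustrative assumptions."""
    hard_costs: float  # directly invoiced: hardware, licences, power
    soft_costs: float  # rarely tracked: staff time, training, downtime

    def yearly_total(self) -> float:
        return self.hard_costs + self.soft_costs

def tco(model: CostModel, years: int, upfront: float = 0.0) -> float:
    """Total cost of ownership: one-off upfront spend plus recurring yearly costs."""
    return upfront + model.yearly_total() * years

def simple_roi(gain: float, investment: float) -> float:
    """Simple ROI = (gain - investment) / investment."""
    return (gain - investment) / investment

years = 3
migration_cost = 100_000
on_prem = CostModel(hard_costs=100_000, soft_costs=50_000)
cloud = CostModel(hard_costs=110_000, soft_costs=20_000)

on_prem_tco = tco(on_prem, years, upfront=200_000)  # servers, racks, network gear
cloud_tco = tco(cloud, years, upfront=migration_cost)

# Gain: what the cloud option saves over the period before counting the
# migration itself; the investment is the migration cost.
gross_savings = on_prem_tco - (cloud_tco - migration_cost)
roi = simple_roi(gross_savings, migration_cost)

print(f"On-prem TCO: ${on_prem_tco:,.0f}")
print(f"Cloud TCO:   ${cloud_tco:,.0f}")
print(f"ROI of migrating: {roi:.0%}")
```

Note how the soft costs dominate the difference in this made-up example, which is exactly the part organizations tend not to track.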

Cost Optimization Strategy

- Appropriate Provisioning

  - Provision the resources you need and nothing more.

  - Consolidate where possible for greater density and lower complexity.

  - CloudWatch can help by monitoring utilization.

- Right Sizing

- Using lowest cost resource that still meets the technical specifications

- Architecting for most consistence use of resources is best versus spikes and valleys.

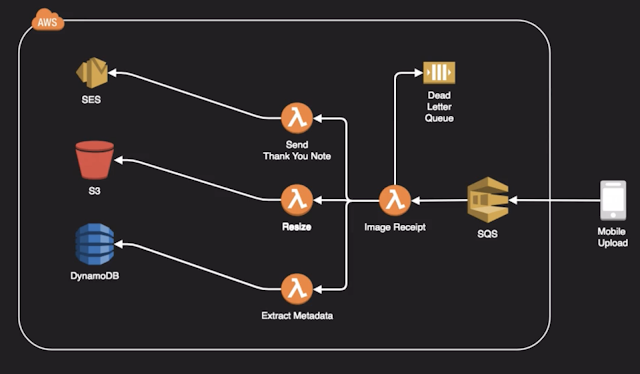

- Loosely coupled architectures using SNS,SQS, Lambda and DynamoDB can smooth demand and create more predictability and consistency.

- Purchase Options

- For permanent applications to needs, Reserved instances provide the best cost advantages.

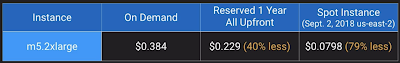

- Spot instances are best for temporary horizontal scaling.

- EC2 Fleet lets you define target mix On-Demand, Reserved and Spot instances

- Geographic Selection

  - AWS pricing can vary from region to region.

  - Consider potential savings from locating resources in a remote region if local access is not required.

  - Route 53 and CloudFront can be used to reduce the potential latency of a remote region.

- Managed Services

  - Leverage managed services such as MySQL on RDS over self-managed options such as MySQL on EC2.

  - Cost savings are gained through lower complexity and less manual intervention.

  - RDS, Redshift, Fargate, and EMR are great examples of fully managed services that replace traditionally complex and difficult installations with push-button ease.

- Optimized Data Transfer

  - Data going out of and between AWS regions can become a significant cost component.

  - Direct Connect can be a more cost-effective option, depending on data volume and speed.

- Resource Groups are groupings of AWS assets defined by tags.

- Create custom consoles to consolidate metrics, alarms, and config details around given tags.

- Common resource groupings:

  - Environments (DEV, QA, PRD)

  - Project resources

  - Collections of resources supporting a key business process

  - Resources allocated to various departments or cost centres
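
To make tag-based grouping concrete, here is a minimal Python sketch that groups an in-memory resource inventory by the value of one tag key and checks the 50-tag-per-resource limit quoted in these notes. The inventory, the ARNs, and the `Environment`/`CostCenter` keys are hypothetical. In a real account the list could come from the Resource Groups Tagging API (for example boto3's `resourcegroupstaggingapi` client and its `get_resources` call), which is deliberately not used here so the sketch runs offline.

```python
from collections import defaultdict

MAX_TAGS_PER_RESOURCE = 50  # limit quoted in these notes for most resources

# Hypothetical inventory: resource ARN -> tags
inventory = {
    "arn:aws:ec2:us-east-1:111122223333:instance/i-aaa": {"Environment": "DEV", "CostCenter": "1001"},
    "arn:aws:ec2:us-east-1:111122223333:instance/i-bbb": {"Environment": "PRD", "CostCenter": "1002"},
    "arn:aws:rds:us-east-1:111122223333:db:orders": {"Environment": "PRD", "CostCenter": "1002"},
    "arn:aws:s3:::scratch-bucket": {"CostCenter": "1001"},
}

def group_by_tag(resources: dict[str, dict[str, str]], key: str) -> dict:
    """Group resource ARNs by the value of one tag key; untagged ones go under None."""
    groups = defaultdict(list)
    for arn, tags in resources.items():
        groups[tags.get(key)].append(arn)
    return dict(groups)

def over_tag_limit(resources: dict[str, dict[str, str]]) -> list[str]:
    """Return ARNs carrying more tags than the per-resource limit."""
    return [arn for arn, tags in resources.items() if len(tags) > MAX_TAGS_PER_RESOURCE]

by_env = group_by_tag(inventory, "Environment")
for env, arns in by_env.items():
    print(env or "<untagged>", len(arns))
```

The `None` group is worth watching: untagged resources cannot be placed in any Resource Group, so they silently drop out of per-environment or per-cost-centre views.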

- With Reserved Instances (RIs), you purchase usage of EC2 instances in advance for a significant discount over On-Demand pricing.

- An RI provides a capacity reservation when it is scoped to a specific AZ.

- AWS Billing automatically applies the discounted rate when you launch an instance that matches a purchased RI.

- EC2 has three RI types: Standard, Convertible, and Scheduled.

- RIs can be shared across multiple accounts within Consolidated Billing.

- If you find you don't need your RIs, you can try to sell them on the Reserved Instance Marketplace.
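
The RI trade-off is easiest to see as a break-even calculation. This Python sketch compares a year of On-Demand usage with a one-year RI that has an upfront fee plus a discounted hourly rate. All prices are hypothetical placeholders, not current AWS rates; the point is the shape of the calculation. Because an RI is paid for whether or not a matching instance runs, it only pays off above a certain utilization.

```python
HOURS_PER_YEAR = 8760

def on_demand_cost(hourly_rate: float, hours_used: float) -> float:
    """On-Demand: pay only for the hours actually used."""
    return hourly_rate * hours_used

def reserved_cost(upfront: float, hourly_rate: float) -> float:
    """RI: upfront fee plus the hourly rate for every hour of the term, used or not."""
    return upfront + hourly_rate * HOURS_PER_YEAR

def break_even_utilization(od_rate: float, upfront: float, ri_rate: float) -> float:
    """Fraction of the year an instance must run for the RI to be cheaper."""
    return reserved_cost(upfront, ri_rate) / (od_rate * HOURS_PER_YEAR)

# Hypothetical prices for one instance type
od_rate, ri_upfront, ri_rate = 0.10, 300.0, 0.02

ri_total = reserved_cost(ri_upfront, ri_rate)
always_on = on_demand_cost(od_rate, HOURS_PER_YEAR)
print(f"RI yearly cost:        ${ri_total:.2f}")
print(f"On-Demand, always on:  ${always_on:.2f}")
print(f"Break-even utilization: {break_even_utilization(od_rate, ri_upfront, ri_rate):.0%}")
```

With these made-up numbers the RI costs about 46% less than an always-on On-Demand instance, but it is only the cheaper choice if the instance runs more than roughly 54% of the year, which is why RIs suit steady, permanent workloads.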

- Instance type (an RI attribute): designates CPU, memory, and networking capability.

- Platform: Linux, SUSE Linux, RHEL, Windows, SQL Server.

- Tenancy: default tenancy or dedicated tenancy.

- Availability Zone: if an AZ is selected, capacity is reserved and the discount applies to that AZ (zonal RI). If no AZ is specified, no capacity reservation is created, but the discount applies to any instance in the family in any AZ in the region (regional RI).
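
The zonal/regional distinction above can be expressed as a matching rule. The following Python sketch is a deliberately simplified model, assuming the attributes listed here are the only ones that matter: a zonal RI matches only its exact instance type in its AZ, while a regional RI matches any size in the same instance family anywhere in the region, as these notes describe. Real AWS matching has further conditions (for example, size flexibility applies only to certain platforms and tenancies), so treat this as an illustration rather than the billing engine's logic. The `RI` and `Instance` types are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Instance:
    instance_type: str  # e.g. "m5.large"
    platform: str       # e.g. "Linux"
    tenancy: str        # "default" or "dedicated"
    az: str             # e.g. "us-east-1a"

@dataclass(frozen=True)
class RI:
    instance_type: str
    platform: str
    tenancy: str
    region: str
    az: Optional[str] = None  # set for a zonal RI, None for a regional RI

def family(instance_type: str) -> str:
    return instance_type.split(".")[0]  # "m5.large" -> "m5"

def discount_applies(ri: RI, inst: Instance) -> bool:
    """Simplified model of whether an RI's discount covers a running instance."""
    if (ri.platform, ri.tenancy) != (inst.platform, inst.tenancy):
        return False
    if ri.az is not None:
        # Zonal RI: exact instance type in the exact AZ (capacity is reserved there)
        return ri.az == inst.az and ri.instance_type == inst.instance_type
    # Regional RI: any AZ in the region (assumes standard AZ names, i.e. the
    # region name plus one letter) and any size in the same family
    return inst.az[:-1] == ri.region and family(inst.instance_type) == family(ri.instance_type)

zonal = RI("m5.large", "Linux", "default", "us-east-1", az="us-east-1a")
regional = RI("m5.large", "Linux", "default", "us-east-1")
vm = Instance("m5.xlarge", "Linux", "default", "us-east-1b")
print(discount_applies(zonal, vm), discount_applies(regional, vm))  # False True
```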

- Spot Instances are excess EC2 capacity that AWS tries to sell on a market-exchange basis.

- The customer creates a Spot request and specifies the AMI, desired instance types, and other key information.

- The customer defines the highest price they are willing to pay for instances. If capacity is constrained and others are willing to pay more, your instances might be terminated or stopped.

- A Spot request can be one-time ("fill and kill"), request-and-maintain, or duration-based.

- For a one-time request, the instance is terminated and ephemeral data is lost.

- For a request-and-maintain request, the instance can be configured to terminate, stop, or hibernate until the price point can be met again.
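
The difference between one-time and request-and-maintain Spot requests can be illustrated with a toy simulation. This Python sketch walks a hypothetical hourly Spot price series against the customer's maximum price: a one-time request waits until it is fulfilled, then ends for good the first time it is outbid, while a persistent request pauses and resumes whenever the price falls back under the maximum. The price series and the price-only rule are assumptions for illustration; real Spot interruptions also depend on available capacity.

```python
def spot_running_hours(prices: list[float], max_price: float, persistent: bool) -> list[int]:
    """Return the hour indexes in which the Spot instance runs."""
    running = []
    for hour, price in enumerate(prices):
        if price <= max_price:
            running.append(hour)
        elif running and not persistent:
            # One-time request was fulfilled and is now outbid:
            # instance terminated, ephemeral data lost, request closed.
            break
        # Otherwise: either still waiting for fulfilment, or a persistent
        # request whose instance is stopped/hibernated until the price drops.
    return running

prices = [0.031, 0.029, 0.045, 0.050, 0.030, 0.028]  # hypothetical $/hour
max_price = 0.035
print(spot_running_hours(prices, max_price, persistent=False))  # [0, 1]
print(spot_running_hours(prices, max_price, persistent=True))   # [0, 1, 4, 5]
```

This is why Spot suits interruption-tolerant, horizontally scaled work: the persistent request recovers capacity automatically, but no individual instance is guaranteed to keep running.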

- Dedicated Instances are virtualized instances running on hardware dedicated to you.

- They may share hardware with other non-dedicated instances in the same account.

- Available as On-Demand, Reserved Instances, and Spot Instances.

- They cost an additional $2 per hour per region.
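
The per-region fee is charged on top of the instance charges, so it weighs most heavily on small fleets. Here is a rough Python sketch of a month's cost, assuming the fee is billed once for every hour in which at least one Dedicated Instance runs in the region (regardless of how many), and using a hypothetical instance rate. The $2 figure is the one quoted in these notes; check current pricing before relying on it.

```python
REGION_FEE_PER_HOUR = 2.00  # dedicated per-region fee quoted in these notes

def monthly_dedicated_cost(hourly_usage: list[int], instance_rate: float) -> float:
    """hourly_usage[h] = number of Dedicated Instances running in hour h."""
    instance_cost = sum(hourly_usage) * instance_rate
    fee_hours = sum(1 for n in hourly_usage if n > 0)  # one fee per hour, not per instance
    return instance_cost + fee_hours * REGION_FEE_PER_HOUR

# Hypothetical month: 4 instances running around the clock for 730 hours
usage = [4] * 730
print(f"${monthly_dedicated_cost(usage, instance_rate=0.20):,.2f}")
```

In this example the fixed fee ($1,460) is larger than the instance charges themselves ($584), which shows why the fee matters less as more Dedicated Instances share the same region.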

- Dedicated Hosts are physical servers dedicated to your use.

- You have control over which instances are deployed on that host.

- Available as On-Demand or with a Dedicated Host Reservation.

- Useful if you have server-bound software licences that use metrics such as per-core, per-socket, or per-VM.

- Each Dedicated Host can run only one EC2 instance size and type.

- AWS Budgets allow you to set predefined limits and notifications when you are nearing or exceeding a budget.

- Budgets can be based on cost, usage, Reserved Instance utilization, or Reserved Instance coverage.

- Useful as a method to distribute cost and usage awareness, and responsibility, to platform users.
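
A budget with notification thresholds boils down to comparing spend against limits. This Python sketch evaluates hypothetical 80% and 100% thresholds against both actual month-to-date spend and a naive linear forecast of month-end spend. The threshold values, the linear forecast, and the function names are assumptions for illustration; AWS Budgets computes its own forecasts and sends the notifications itself.

```python
def forecast_month_end(spend_to_date: float, day_of_month: int, days_in_month: int) -> float:
    """Naive linear forecast: assume the daily run rate so far continues."""
    return spend_to_date / day_of_month * days_in_month

def triggered_alerts(budget: float, spend_to_date: float, day_of_month: int,
                     days_in_month: int, thresholds=(0.8, 1.0)) -> list[str]:
    """List which actual/forecasted thresholds have been crossed."""
    forecast = forecast_month_end(spend_to_date, day_of_month, days_in_month)
    alerts = []
    for t in thresholds:
        if spend_to_date >= budget * t:
            alerts.append(f"ACTUAL >= {t:.0%}")
        if forecast >= budget * t:
            alerts.append(f"FORECASTED >= {t:.0%}")
    return alerts

# Hypothetical: $1,000 monthly budget, $450 spent by day 12 of a 30-day month
print(triggered_alerts(1000, 450, 12, 30))
```

Here actual spend is still under both thresholds, but the run rate projects $1,125 for the month, so both forecast alerts fire: the early warning is what lets platform users react before the budget is actually exceeded.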

- Trusted Advisor runs a series of checks on your resources and suggests improvements.

- It can recommend cost optimization adjustments, such as Reserved Instance purchases or scaling adjustments.

- Core checks are available to all customers.

- Full Trusted Advisor benefits require a Business or Enterprise support plan.
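
To illustrate the kind of cost check Trusted Advisor runs, here is a Python sketch that flags instances whose daily CPU utilization stayed low on several days of a two-week window. The thresholds (10% CPU on at least 4 of the last 14 days) are illustrative assumptions chosen for this example, not a statement of Trusted Advisor's exact criteria, and the utilization data is hypothetical; in practice it would come from CloudWatch metrics.

```python
def low_utilization(daily_cpu: dict[str, list[float]], cpu_threshold: float = 10.0,
                    min_low_days: int = 4, window: int = 14) -> list[str]:
    """Return instance IDs whose daily average CPU was <= threshold on enough recent days."""
    flagged = []
    for instance_id, samples in daily_cpu.items():
        recent = samples[-window:]  # only the most recent days count
        low_days = sum(1 for cpu in recent if cpu <= cpu_threshold)
        if low_days >= min_low_days:
            flagged.append(instance_id)
    return flagged

# Hypothetical daily average CPU % over 14 days
daily_cpu = {
    "i-busy": [55, 60, 48, 70, 65, 52, 58, 61, 49, 66, 72, 50, 57, 63],
    "i-idle": [3, 2, 4, 5, 2, 3, 40, 2, 1, 3, 2, 4, 3, 2],
}
print(low_utilization(daily_cpu))  # ['i-idle']
```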

- Adopt a consumption model: Pay only for the computing resources you consume, and increase or decrease usage depending on business requirements—not with elaborate forecasting.

- Measure overall efficiency: Measure the business output of the system and the costs associated with delivering it.

- Stop spending money on data center operations: AWS does the heavy lifting of racking, stacking, and powering servers, so you can focus on your customers and business projects rather than on IT infrastructure.

- Analyze and attribute expenditure: The cloud makes it easier to accurately identify the usage and cost of systems, which then allows transparent attribution of IT costs to revenue streams and individual business owners.

- Use managed services to reduce cost of ownership: In the cloud, managed services remove the operational burden of maintaining servers for tasks like sending email or managing databases.
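
The "analyze and attribute expenditure" and "measure overall efficiency" principles combine naturally: attribute each cost line item to an owner through a tag, then divide by the business output that owner delivered. The line items, the `CostCenter` tag key, and the order counts below are hypothetical; in AWS, cost allocation tags and the Cost and Usage Report are a common source for this kind of data.

```python
from collections import defaultdict

# Hypothetical billing line items: (cost in USD, tags on the resource)
line_items = [
    (1200.0, {"CostCenter": "checkout"}),
    (800.0, {"CostCenter": "checkout"}),
    (500.0, {"CostCenter": "search"}),
    (300.0, {}),  # untagged spend cannot be attributed to an owner
]

# Hypothetical business output per cost center for the same period
units_delivered = {"checkout": 40_000, "search": 25_000}

def cost_by_owner(items, tag_key="CostCenter"):
    """Sum cost per tag value; anything without the tag is 'unattributed'."""
    totals = defaultdict(float)
    for cost, tags in items:
        totals[tags.get(tag_key, "unattributed")] += cost
    return dict(totals)

totals = cost_by_owner(line_items)
for owner, cost in totals.items():
    output = units_delivered.get(owner)
    unit = f"${cost / output:.3f} per unit" if output else "n/a"
    print(f"{owner}: ${cost:,.2f} ({unit})")
```

Tracking the cost per unit of output over time, rather than the raw bill, shows whether efficiency is improving as usage grows.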

- Cost-effective resources

- Matching supply with demand

- Expenditure awareness

- Optimizing over time

- Appropriate provisioning

- Right sizing

- Purchasing options: On-Demand Instances, Spot Instances, and Reserved Instances

- Geographic selection

- Managed services

- Optimize data transfer

- The monitoring must accurately reflect the end-user experience.

- Select the correct granularity for the time period of analysis that is required to cover any system cycles.

- Assess the cost of modification against the benefit of right sizing
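
The right-sizing considerations above can be turned into a simple rule: look at a high percentile of utilization over a window long enough to cover the workload's cycles, add headroom, and pick the smallest size that fits. This Python sketch assumes a hypothetical catalogue of sizes within one family, a nearest-rank 95th-percentile rule, and 20% headroom; all three are choices to tune, not AWS recommendations.

```python
import math

# Hypothetical sizes in one instance family: name -> vCPUs
SIZES = {"large": 2, "xlarge": 4, "2xlarge": 8}

def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of the samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def recommend_size(vcpus_used: list[float], pct: float = 95, headroom: float = 0.2) -> str:
    """Smallest size whose vCPU count covers the pct-th percentile plus headroom."""
    needed = percentile(vcpus_used, pct) * (1 + headroom)
    for name, vcpus in sorted(SIZES.items(), key=lambda kv: kv[1]):
        if vcpus >= needed:
            return name
    return max(SIZES, key=SIZES.get)  # nothing fits: fall back to the largest size

# Hypothetical hourly samples of vCPUs in use on an 8-vCPU instance
samples = [1.2, 1.5, 2.8, 1.1, 3.0, 2.2, 1.9, 2.5, 1.4, 2.9] * 7
print(recommend_size(samples))  # xlarge
```

Using a percentile instead of the average keeps regular peaks from being ignored, while the sampling window addresses the "correct granularity" point; whether the saving justifies the change (the third consideration) still has to be judged separately.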

- Traditional data center: a few big purchase decisions are made by a few people every few years.

- Typically overprovisioned as a result of planning up front for spikes in usage.

- Cloud: decentralized spending power.

- Small decisions made by a lot of people.

- Resources are spun up and down as new services are designed and then decommissioned.

- Cost ramifications felt by the organization as a whole are closely monitored and tracked.

- Labor. How much do you spend on maintaining your environment?

- Network. How much bandwidth do you need? What is your bandwidth peak to average ratio? What are you assuming for network gear? What if you need to scale beyond a single rack?

- Capacity. How do you plan for capacity? What is the cost of over-provisioning for peak capacity? What if you need less capacity? Anticipating next year?

- Availability/Power. Do you have a disaster recovery (DR) facility? What was your power utility bill for your data centers last year? Have you budgeted for both average and peak power requirements? Do you have separate costs for cooling/HVAC? Are you accounting for 2N (parallel redundancy) power? If not, what happens when you have a power issue to your rack?

- Servers. What is your average server utilization? How much do you overprovision for peak load? What is the cost of over-provisioning?

- Space. Will you run out of data center space? When is your lease up?
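
Several of the questions above (average utilization, overprovisioning for peak) reduce to one calculation: how much of the capacity you pay for sits idle. This Python sketch estimates that for a hypothetical on-premises fleet sized for peak load; the request rates, per-server capacity, and per-server yearly cost are placeholders to replace with your own numbers.

```python
import math

def servers_for_peak(peak_load: float, load_per_server: float) -> int:
    """Servers required to cover the peak, rounded up."""
    return math.ceil(peak_load / load_per_server)

def idle_capacity_cost(servers: int, yearly_cost_per_server: float,
                       avg_utilization: float) -> float:
    """Yearly spend on capacity that is paid for but, on average, unused."""
    return servers * yearly_cost_per_server * (1 - avg_utilization)

# Hypothetical fleet: peak of 9,000 requests/s, 500 requests/s per server,
# average load of 2,700 requests/s, fully loaded cost of $6,000 per server per year
fleet = servers_for_peak(9_000, 500)      # 18 servers
avg_util = 2_700 / (fleet * 500)          # 0.3
print(fleet, f"{avg_util:.0%}", f"${idle_capacity_cost(fleet, 6_000, avg_util):,.0f}")
```

The idle share is the cost of over-provisioning that a consumption model avoids, since cloud capacity can follow the average rather than the peak.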

AWS Tagging

Most resources can have up to 50 tags.

AWS Resource Groups

Spot and Reserved Instances

Reserved Instances:

| | Standard | Convertible |
|---|---|---|
| Terms | 1 year, 3 years | 1 year, 3 years |
| Average discount off On-Demand | 40%-60% | 31%-54% |
| Change AZ, instance size, networking type | Yes, via the Modify Reserved Instances API or console | Yes, via the Exchange Reserved Instances API or console |
| Change instance family, OS, tenancy, payment options | No | Yes |
| Benefits from price reductions | No | Yes |
| Sellable on Reserved Instance Marketplace | Yes (sale proceeds must be deposited in a US bank account) | Not yet |

RI Attributes

Spot Instances:

Dedicated Instances and Hosts:

Dedicated Instances

Dedicated Host

AWS Budgets

Trusted Advisor

Cost Optimization

Design Principles

Keep these design principles in mind as we discuss best practices for cost optimization:

Cost optimization in the cloud is composed of four areas:

Cost-Effective Resources

Using the appropriate services, resources, and configurations for your workloads is key to cost savings. In AWS there are a number of different approaches:

Keep in mind three key considerations when you perform right-sizing exercises:

The following table contrasts the traditional funding model against the cloud funding model.

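| Funding Model | Characteristics |
|---|---|
| Traditional Data Center | A few big purchase decisions made by a few people every few years; typically overprovisioned for peak usage |
| Cloud | Decentralized spending power; many small decisions; resources spun up and down as services are designed and decommissioned; cost ramifications closely monitored and tracked |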

Start with an Understanding of Current Costs

Evaluate the following when calculating your on-premises computing costs:
