I made this summary, with some bullets and some passages extracted from AWS papers and from online courses like A Cloud Guru, Linux Academy and Udemy, to study data store concepts.

I hope it can be useful to someone.

Data store

- Persistent data store: durable data that survives restarts (RDS, Glacier)

- Transient data store: temporary data that will be passed to another database or process (SQS, SNS)

- Ephemeral data store: data can be lost when the instance stops (EC2 instance store, Memcached)

IOPS vs Throughput

- IOPS (input/output operations per second): measures how many read or write operations can be performed per second

- Throughput: measures the amount of data that can be moved over a period of time
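The two are linked: throughput is roughly IOPS multiplied by the size of each I/O. A minimal Python sketch with made-up workload numbers (assumptions, not the limits of any AWS volume) shows why a high IOPS figure does not automatically mean high throughput:

```python
def throughput_mib_per_s(iops: float, io_size_kib: float) -> float:
    """Throughput in MiB/s = operations per second x size of each operation (KiB) / 1024."""
    return iops * io_size_kib / 1024


# Illustrative workloads only, not the spec of any particular volume type
small_random = throughput_mib_per_s(3000, 16)       # many small random I/Os
large_sequential = throughput_mib_per_s(500, 1024)  # few large sequential I/Os
print(f"{small_random:.1f} MiB/s vs {large_sequential:.1f} MiB/s")
```

The first workload does six times more operations per second but moves under 50 MiB/s, while the second moves 500 MiB/s: databases tend to care about IOPS, log processing and big data scans about throughput.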

Consistency is far better than rare moments of greatness.

Availability is considered a higher priority in the BASE model, but not in the ACID model, which values consistency over availability. Lazy writes are typical of eventual consistency rather than perpetual consistency.


ACID

- Atomic -> transactions are all or nothing

- Consistent -> transactions must be valid

- Isolated -> transactions can't mess with one another

- Durable -> completed transactions must stick around
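Atomicity is easiest to see with a transfer that fails halfway. This sketch uses Python's built-in sqlite3 module as a stand-in for any ACID database (the accounts table and names are hypothetical): the credit is applied first, the debit then violates a CHECK constraint, and the whole transaction is rolled back.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (name TEXT PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"  # consistency rule
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Both UPDATEs commit together or neither does (atomicity)."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            # Credit first on purpose, so a failure leaves a partial change to undo
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False  # CHECK failed; the credit to dst was rolled back as well


def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))


print(transfer(conn, "alice", "bob", 30))   # True
print(transfer(conn, "bob", "alice", 500))  # False: bob would go negative
print(balances(conn))                       # {'alice': 70, 'bob': 80}
```

The failed transfer leaves no trace: alice never keeps the 500 she was briefly credited.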

BASE

- Basically Available -> values availability even if the data is stale

- Soft state -> might not be instantly consistent across stores

- Eventual consistency -> will achieve consistency at some point
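A toy primary/replica pair makes the BASE terms concrete. In this Python sketch (a simplified model, not any AWS service's internals) writes land on the primary immediately and reach the replica only when a lazy replication step runs, so a default read can be stale until the stores converge.

```python
from collections import deque


class LazyReplicatedStore:
    """Toy primary/replica pair: writes reach the replica only when
    replicate() drains the pending queue (a lazy write)."""

    def __init__(self):
        self.primary = {}
        self.replica = {}
        self.pending = deque()

    def write(self, key, value):
        self.primary[key] = value
        self.pending.append((key, value))  # replicated later, not now

    def read(self, key, consistent=False):
        # A consistent read goes to the primary; the default read may be stale
        source = self.primary if consistent else self.replica
        return source.get(key)

    def replicate(self):
        while self.pending:
            key, value = self.pending.popleft()
            self.replica[key] = value


store = LazyReplicatedStore()
store.write("cart:42", ["book"])
print(store.read("cart:42"))                   # None: soft state, replica not caught up
print(store.read("cart:42", consistent=True))  # ['book']
store.replicate()
print(store.read("cart:42"))                   # ['book']: eventually consistent
```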

Database options:

Database on EC2: ultimate control over the database; use it when the preferred DB is not available on RDS

RDS: traditional database for OLTP; data is well structured and ACID compliant

DynamoDB: name/value or unpredictable data structure; in-memory performance with persistence; scales dynamically

Redshift: massive amounts of data, primarily OLAP workloads

Neptune: relationships between objects make up a major portion of the data values

ElastiCache: fast temporary storage for small amounts of data

SaaS partitioning models:

- Silo: separate database for each tenant

- Bridge: single database, multiple schemas

- Pool: Shared database, single schema
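In the pool model, isolation depends on every query being scoped by a tenant identifier. A minimal sketch using sqlite3 as a stand-in database, with a hypothetical orders table and tenant names; in the bridge model each tenant would instead get its own schema, and in the silo model its own database.

```python
import sqlite3

# Pool model: all tenants share one database and one schema;
# a tenant_id column is the only thing separating their rows.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (tenant_id TEXT NOT NULL, order_id INTEGER NOT NULL, "
    "total REAL NOT NULL, PRIMARY KEY (tenant_id, order_id))"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("acme", 1, 10.0), ("acme", 2, 5.5), ("globex", 1, 99.0)],
)
conn.commit()


def orders_for(tenant_id):
    # Forgetting this WHERE clause would leak other tenants' data
    rows = conn.execute(
        "SELECT order_id, total FROM orders WHERE tenant_id = ? ORDER BY order_id",
        (tenant_id,),
    )
    return rows.fetchall()


print(orders_for("acme"))    # [(1, 10.0), (2, 5.5)]
print(orders_for("globex"))  # [(1, 99.0)]
```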

Bridge Model:

RDS:

Managed database option for MySQL, MariaDB, PostgreSQL, SQL Server, Oracle and Aurora

Best for structured, relational data store needs.

Aims to be drop-in replacement for existing on-prem instance of same databases

Automated backup and patching in customer-defined maintenance windows.

Push button scaling, replication and redundancy.

Multi-AZ

Read replicas serve regional users

For MySQL, non-transactional storage engines like MyISAM don't support replication; you must use InnoDB (or XtraDB on MariaDB)

MariaDB is an open source fork of MySQL

RDS Anti-patterns:

- Lots of large binary objects (BLOBs) -> use S3

- Automated scalability -> use DynamoDB

- Name/value data structure -> use DynamoDB

- Data is not well structured or is unpredictable -> use DynamoDB

- Other database platforms like IBM DB2 or SAP HANA, or complete control over the database -> EC2

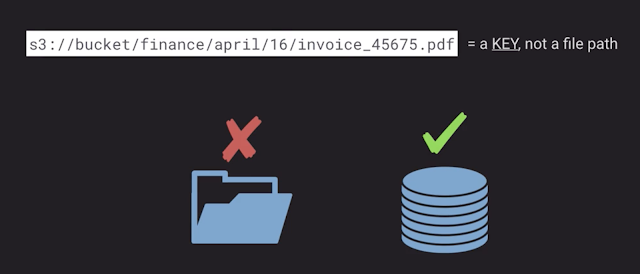

S3

The maximum object size in S3 is 5 TB

The largest object you can upload in a single PUT is 5 GB

It is recommended to use multipart upload for objects larger than 100 MB
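Multipart uploads have their own limits: each part except the last must be at least 5 MiB, no part may exceed 5 GiB, and an upload can have at most 10,000 parts. This Python sketch (a planning helper, not part of any AWS SDK; the 8 MiB preferred part size is an arbitrary assumption) picks a part size that respects those limits:

```python
import math

MIB = 1024 ** 2
MIN_PART = 5 * MIB          # every part except the last must be at least 5 MiB
MAX_PART = 5 * 1024 * MIB   # no part may exceed 5 GiB
MAX_PARTS = 10_000          # at most 10,000 parts per upload
MAX_OBJECT = 5 * 1024 ** 4  # 5 TiB maximum object size


def plan_multipart(object_size: int, preferred_part: int = 8 * MIB) -> tuple[int, int]:
    """Return (part_size, part_count) for uploading object_size bytes in parts."""
    if not 0 < object_size <= MAX_OBJECT:
        raise ValueError("object size must be between 1 byte and 5 TiB")
    part = max(preferred_part, MIN_PART)
    # Grow the part size when the preferred one would need more than 10,000 parts
    part = max(part, math.ceil(object_size / MAX_PARTS))
    part = min(part, MAX_PART)
    return part, math.ceil(object_size / part)


print(plan_multipart(100 * MIB))         # (8388608, 13): 12 full 8 MiB parts + 1 partial
print(plan_multipart(200 * 1024 * MIB))  # larger parts so the count stays within 10,000
```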

The object name is a key, not exactly a path

Amazon S3 is designed for 99.999999999 percent (11 nines) durability per object

Consistency:

S3 provides read-after-write consistency for PUTs of new objects

HEAD or GET requests for a key before the object exists result in eventual consistency

S3 offers eventual consistency for overwrite PUTs and DELETEs

Updates to a single key are atomic

If you make a HEAD or GET request for the S3 key name before creating the object, S3 provides only eventual consistency for read-after-write. As a result, you may get a 404 Not Found error until the upload is fully replicated.

Note: this was the S3 consistency model when these notes were written. Since December 2020, S3 provides strong read-after-write consistency for all PUT and DELETE requests, including overwrites.


Security:

- Resource-based -> object ACL, bucket policy

- User-based -> IAM policy

- Optional Multi-Factor Authentication (MFA) before delete

Data protection

A new version is created with each write

Enables roll-back and undelete capabilities

Old versions count as billable size until they are permanently deleted.

Integrated with lifecycle management

Cross-Region Replication
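A toy Python model of the versioning behaviour above (it mimics the semantics, it is not the S3 API): every PUT adds a version, a plain DELETE only adds a delete marker, removing the marker undeletes the object, and every stored version still counts toward billable size.

```python
class VersionedBucket:
    """Toy model of S3 versioning semantics, not the S3 API."""

    DELETE_MARKER = object()

    def __init__(self):
        self.versions = {}  # key -> list of versions, oldest first

    def put(self, key, data: bytes):
        self.versions.setdefault(key, []).append(data)

    def delete(self, key):
        # A plain delete does not erase data; it stacks a delete marker on top
        self.versions.setdefault(key, []).append(self.DELETE_MARKER)

    def get(self, key):
        stack = self.versions.get(key, [])
        if not stack or stack[-1] is self.DELETE_MARKER:
            return None  # S3 would answer 404 Not Found
        return stack[-1]

    def undelete(self, key):
        # Removing the delete marker makes the previous version current again
        stack = self.versions.get(key, [])
        if stack and stack[-1] is self.DELETE_MARKER:
            stack.pop()

    def billable_bytes(self):
        # All stored versions are billed, not only the current one
        return sum(len(v) for stack in self.versions.values()
                   for v in stack if v is not self.DELETE_MARKER)


bucket = VersionedBucket()
bucket.put("notes.txt", b"v1")
bucket.put("notes.txt", b"v2 longer")
bucket.delete("notes.txt")
print(bucket.get("notes.txt"))   # None
bucket.undelete("notes.txt")
print(bucket.get("notes.txt"))   # b'v2 longer'
print(bucket.billable_bytes())   # 11: both versions still count
```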

Lifecycle Management

Optimize storage cost

Adhere to data retention policies

Keep S3 volumes well maintained
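The rule below is hypothetical (a logs/ prefix moved to Standard-IA after 30 days, to Glacier after 90, and deleted after 365), written in the same shape as one entry of the Rules list that boto3's put_bucket_lifecycle_configuration accepts. The evaluator is only a local sketch of what such a rule implies for an object of a given age; S3 itself applies the rule.

```python
# Hypothetical rule, shaped like one entry of an S3 lifecycle configuration's "Rules"
RULE = {
    "ID": "archive-logs",
    "Filter": {"Prefix": "logs/"},
    "Status": "Enabled",
    "Transitions": [
        {"Days": 30, "StorageClass": "STANDARD_IA"},
        {"Days": 90, "StorageClass": "GLACIER"},
    ],
    "Expiration": {"Days": 365},
}


def storage_class_for(key, age_days, rule=RULE):
    """Storage class the rule implies for an object, or None if it would be expired.
    Assumes objects are uploaded to STANDARD."""
    if rule["Status"] != "Enabled" or not key.startswith(rule["Filter"]["Prefix"]):
        return "STANDARD"  # rule does not apply
    if age_days >= rule["Expiration"]["Days"]:
        return None  # object would be deleted
    current = "STANDARD"
    for transition in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


print(storage_class_for("logs/app.log", 45))   # STANDARD_IA
print(storage_class_for("logs/app.log", 400))  # None
```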

Analytics

Data lake concepts -> with Athena, Redshift Spectrum, QuickSight

IoT streaming data repository -> Kinesis Firehose

Machine learning and AI storage -> Rekognition, Lex, MXNet

Storage Class Analysis -> S3 Management Analytics

Encryption at Rest

- SSE-S3 -> use S3's existing encryption keys for AES-256

- SSE-C -> upload your own AES-256 encryption key, which S3 will use when it writes the object

- SSE-KMS -> use a key generated and managed by AWS Key Management Service

- Client-side -> encrypt the object using your own local encryption process before uploading it to S3
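With SSE-C the key travels with each request in three headers: the algorithm, the base64-encoded key, and the base64-encoded MD5 of the key so S3 can check it arrived intact. A minimal Python sketch that only builds those headers (it sends nothing; boto3's put_object exposes the same feature through its SSECustomerAlgorithm and SSECustomerKey parameters):

```python
import base64
import hashlib
import os


def sse_c_headers(key: bytes) -> dict:
    """Request headers S3 expects for SSE-C with a 256-bit customer key."""
    if len(key) != 32:
        raise ValueError("SSE-C needs a 256-bit (32-byte) AES key")
    return {
        "x-amz-server-side-encryption-customer-algorithm": "AES256",
        "x-amz-server-side-encryption-customer-key": base64.b64encode(key).decode(),
        # MD5 of the raw key lets S3 verify the key was not corrupted in transit
        "x-amz-server-side-encryption-customer-key-MD5":
            base64.b64encode(hashlib.md5(key).digest()).decode(),
    }


customer_key = os.urandom(32)  # keep it safe: S3 does not store the key itself
headers = sse_c_headers(customer_key)
```

The same key and headers must be sent again on every GET of that object; lose the key and the object cannot be read.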

Tricks:

- Transfer Acceleration -> speed up data uploads using CloudFront in reverse

- Requester Pays -> the requester rather than the bucket owner pays for requests and data transfer

- Tags -> assign tags to objects for use in costing, billing and security

- Events -> trigger notifications to SNS, SQS or Lambda when certain events happen in your bucket

- Static web hosting -> simple and massively scalable static website hosting

- BitTorrent -> use the BitTorrent protocol to retrieve any publicly available object by automatically generating a .torrent file
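For the Events feature, a Lambda function receives a JSON document with a Records list describing what changed. A minimal sketch of a hypothetical Python handler; the sample event is trimmed to the fields used, and the bucket and key names are made up. Object keys arrive URL-encoded, so a space shows up as "+".

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Hypothetical Lambda handler for S3 event notifications: list what changed."""
    changes = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the notification, so decode them first
        key = unquote_plus(record["s3"]["object"]["key"])
        changes.append((record["eventName"], bucket, key))
    return changes


# Trimmed sample event with hypothetical names
sample_event = {
    "Records": [{
        "eventName": "ObjectCreated:Put",
        "s3": {
            "bucket": {"name": "my-example-bucket"},
            "object": {"key": "reports/march+2019.csv", "size": 1024},
        },
    }]
}
print(handler(sample_event, None))
# [('ObjectCreated:Put', 'my-example-bucket', 'reports/march 2019.csv')]
```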

Glacier

Cheap, slow to respond, seldom accessed

Cold storage

Used by the AWS Storage Gateway Virtual Tape Library

Integrated with Amazon S3 via lifecycle management

Faster retrieval speed options if you pay more

Amazon Glacier is designed to provide average annual durability of 99.999999999 percent (11 nines) for an archive.

Glacier Vault Lock is an immutable way to set policies on a Glacier vault such as retention or enforcing MFA before delete.


S3: object storage for blobs

EFS: provides scalable network file storage for EC2 instances

EBS: provides block storage volumes for EC2

EC2 instance store: temporary block storage volumes for EC2

Storage Gateway: an on-premises storage appliance that integrates with cloud storage

Snowball: transports large amounts of data to and from the cloud

Amazon S3 is designed for 99.999999999 percent (11 nines) durability and 99.99 percent availability

Each AWS Snowball appliance is capable of storing 50 TB or 80 TB of data

For Amazon S3, individual files are loaded as objects and can range up to 5 TB in size

CloudFront: provides a global content delivery network

Amazon CloudFront is designed for low-latency and high-bandwidth delivery of content

A CDN is an edge cache: Amazon CloudFront does not provide durable storage. The durable copy lives on the origin server, such as Amazon S3 or a web server running on Amazon EC2.

Amazon S3 offers a range of storage classes designed for different use cases, including:

S3 Standard: for general purpose storage of frequently accessed data

S3 Standard-Infrequent Access (Standard-IA): for long-lived but less frequently accessed data

Glacier: for low-cost archival data; retrieval jobs typically complete in 3 to 5 hours. You can improve the upload experience for large archives by using multipart upload for archives up to about 40 TB (the single archive limit)

S3 Usage Patterns:

- First: used to store and distribute static web content and media

- Second: used to host entire static websites

- Third: used as a data store for computation and large-scale analytics, such as financial transactional analysis, clickstream analytics, and media transcoding.

Elastic Block Storage

Virtual hard drives

Can only be used with EC2

Tied to a single AZ

Variety of optimized choices for IOPS, throughput and cost

Instance store (temporary):

Ideal for caches, buffers, work areas

Data goes away when the EC2 instance is stopped or terminated

EBS snapshots:

Cost-effective and easy backup strategy

Share data sets with other users or accounts

Migrate a system to a new AZ or Region

Amazon EBS provides a range of volume types that are divided into two major categories: SSD-backed storage volumes and HDD-backed storage volumes.

Volume types:

- SSD-Backed Provisioned IOPS (io1): I/O-intensive NoSQL and relational databases

- SSD-Backed General Purpose (gp2): boot volumes, low-latency interactive apps, dev & test

- HDD-Backed Throughput Optimized (st1): big data, data warehouse, log processing

- HDD-Backed Cold (sc1): colder data requiring fewer scans per day
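For gp2, baseline performance scales with volume size. The figures below are the ones AWS documents for gp2 (3 IOPS per GiB, at least 100, at most 16,000, with smaller volumes able to burst to 3,000 IOPS); they have changed over time, so treat them as assumptions and check the current EBS documentation. A small Python sketch:

```python
def gp2_baseline_iops(size_gib: int) -> int:
    """gp2 baseline: 3 IOPS per GiB, floored at 100 and capped at 16,000."""
    return min(max(3 * size_gib, 100), 16_000)


def gp2_can_burst(size_gib: int) -> bool:
    # Volumes whose baseline is below 3,000 IOPS can burst up to 3,000 using I/O credits
    return gp2_baseline_iops(size_gib) < 3_000


for size in (20, 100, 1_000, 6_000):
    print(size, "GiB ->", gp2_baseline_iops(size), "IOPS, burst:", gp2_can_burst(size))
```

This is why over-provisioning gp2 size was a common way to buy IOPS, and why io1 exists for workloads that need guaranteed IOPS independent of size.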

Elastic File System

Implementation of an NFS file share

Elastic storage capacity, and you pay only for what you use

Multi-AZ metadata and data storage

Configure mount points in one, or many, AZs.

Can be mounted from on-premises systems ONLY if using Direct Connect

Alternatively, use the EFS File Sync agent

If your overall Amazon EFS workload will exceed 7,000 file operations per second per file system, we recommend the file system use Max I/O performance mode.

Access control has three layers: IAM permissions for API calls; security groups for EC2 instances and mount targets; and Network File System-level users, groups, and permissions.

Storage gateway

Virtual machine that you run on-premises with VMware or Hyper-V

Provides local storage resources backed by S3 and Glacier

Often used in disaster recovery preparedness to sync to AWS

Useful in cloud migration

File Gateway: allows on-prem or EC2 instances to access objects in S3 via an NFS or SMB mount point

Volume Gateway stored mode: async replication of on-prem data to S3

Volume Gateway cached mode: primary data stored in S3, with frequently accessed data cached locally on-prem

Tape Gateway: virtual media changer and tape library for use with existing backup software
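Cached mode is essentially a local least-recently-used cache in front of S3. This Python toy (a dict stands in for S3, an OrderedDict for the on-prem cache disk) illustrates the idea; it simplifies the real gateway, which buffers writes locally and uploads them asynchronously, by writing straight through.

```python
from collections import OrderedDict


class CachedVolume:
    """Toy model of Volume Gateway cached mode: the backing store ("S3") holds
    every block, a small local LRU cache keeps recently used blocks on-prem."""

    def __init__(self, backing_store: dict, cache_blocks: int):
        self.backing = backing_store  # stands in for S3 (primary copy)
        self.cache = OrderedDict()    # stands in for the on-prem cache disk
        self.capacity = cache_blocks
        self.hits = self.misses = 0

    def read(self, block_id):
        if block_id in self.cache:
            self.hits += 1
            self.cache.move_to_end(block_id)  # mark as most recently used
            return self.cache[block_id]
        self.misses += 1
        data = self.backing[block_id]  # fetch from "S3"
        self._cache(block_id, data)
        return data

    def write(self, block_id, data):
        self.backing[block_id] = data  # simplification: write-through to "S3"
        self._cache(block_id, data)

    def _cache(self, block_id, data):
        self.cache[block_id] = data
        self.cache.move_to_end(block_id)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used block


volume = CachedVolume({0: b"a", 1: b"b", 2: b"c"}, cache_blocks=2)
for block in (0, 1, 0, 2, 1):
    volume.read(block)
print(volume.hits, volume.misses)  # 1 4
```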

WorkDocs

Secure, fully managed file collaboration service

Can integrate with AD for SSO

Web, mobile and native clients (no Linux client)

HIPAA, PCI DSS and ISO compliance requirements

Available SDK for creating complementary apps

Database on Ec2:

Run any database with full control and ultimate flexibility.

Must manage everything like backup, redundancy, patching, scale

Good option when you require a database not yet supported by RDS, such as IBM DB2 or SAP HANA

Good option if it is not feasible to migrate to an AWS managed database

AWS offers two EC2 instance families that are purposely built for storage-centric workloads:

- SSD-Backed Storage-Optimized (i2): NoSQL databases like Cassandra and MongoDB, scale-out transactional databases, data warehousing, Hadoop, and cluster file systems.

- HDD-Backed Dense-Storage (d2): Massively Parallel Processing (MPP) data warehousing, MapReduce and Hadoop distributed computing, distributed file systems, network file systems, log or data-processing applications.

DynamoDB

Managed, multi-AZ NoSQL data store with a cross-region replication option

Defaults to eventually consistent reads, but you can request strongly consistent reads via the ConsistentRead SDK parameter

Priced on throughput rather than compute

Provision read and write capacity in anticipation of need

Autoscaling adjusts capacity within configured min/max levels

On-demand capacity for flexible capacity at a small premium cost

Achieve ACID compliance with DynamoDB transactions
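Since pricing is based on throughput, provisioning means turning item size and request rate into capacity units. A Python sketch of the documented sizing rules (one RCU is one strongly consistent read per second of up to 4 KB, eventually consistent reads cost half and transactional reads double; one WCU is one write per second of up to 1 KB, transactional writes cost double). The function names are hypothetical helpers, not SDK calls.

```python
import math

READ_FACTOR = {"eventual": 0.5, "strong": 1, "transactional": 2}


def read_capacity_units(item_kb, reads_per_sec, mode="eventual"):
    """RCUs needed; item size is rounded up to the next 4 KB."""
    per_read = math.ceil(item_kb / 4)
    return math.ceil(per_read * reads_per_sec * READ_FACTOR[mode])


def write_capacity_units(item_kb, writes_per_sec, transactional=False):
    """WCUs needed; item size is rounded up to the next 1 KB."""
    per_write = math.ceil(item_kb)
    return per_write * writes_per_sec * (2 if transactional else 1)


# 6 KB items read 10 times per second: 2 x 4 KB blocks per read
print(read_capacity_units(6, 10, "strong"))  # 20
print(read_capacity_units(6, 10))            # 10 (eventually consistent)
print(write_capacity_units(6, 5))            # 30
```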

Secondary indexes

Global Secondary Index (GSI)

-> Partition key and sort key can be different from those on the table

-> Use when you want a fast query on attributes outside the primary key without having to do a table scan (reading everything sequentially)

Local Secondary Index (LSI)

-> Same partition key as the table but a different sort key

-> Use when you already know the partition key and want to quickly query on some other attribute

Max 5 local and 5 global secondary indexes per table (limits at the time of writing; AWS has since raised some index quotas, so check the current DynamoDB quotas)

Max 20 projected non-key attributes across all indexes

Indexes take up storage space

Example:

Suppose we created a global secondary index on customerNum; we could then query by this field very quickly
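The difference between scanning and querying an index can be modelled in plain Python. In this sketch the orders table, its items and the projection are hypothetical; the GSI is a separately maintained copy keyed by customerNum that carries the index key, the table key and one projected attribute. In real DynamoDB this would be a Query with IndexName set (for example a hypothetical "customerNum-index") instead of a Scan with a filter.

```python
from collections import defaultdict

# Hypothetical table keyed by orderId; customerNum is an ordinary attribute
orders = {
    "o-1": {"orderId": "o-1", "customerNum": "C7", "total": 30},
    "o-2": {"orderId": "o-2", "customerNum": "C9", "total": 12},
    "o-3": {"orderId": "o-3", "customerNum": "C7", "total": 55},
}


def scan_by_customer(customer_num):
    # Without an index: read every item, then filter (a table scan)
    return [item for item in orders.values() if item["customerNum"] == customer_num]


# GSI keyed by customerNum, projecting the keys plus 'total' (INCLUDE-style projection)
gsi_customer = defaultdict(list)
for item in orders.values():
    gsi_customer[item["customerNum"]].append(
        {"customerNum": item["customerNum"], "orderId": item["orderId"], "total": item["total"]}
    )


def query_gsi(customer_num):
    # With the index: go straight to this customer's partition
    return gsi_customer.get(customer_num, [])


print(query_gsi("C7"))
```

Real GSIs are also updated asynchronously, so queries against them are eventually consistent.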

If you need to:

-> access just a few attributes as fast as possible: consider projecting just those few attributes in a global secondary index. Benefit: lowest possible latency access for non-key items.

-> frequently access some non-key attributes: consider projecting those attributes in a global secondary index. Benefit: lowest possible latency access for non-key items.

-> frequently access most non-key attributes: consider projecting those attributes or even the entire table in a global secondary index. Benefit: maximum flexibility.

-> rarely query but write or update frequently: consider projecting keys only for the global secondary index. Benefit: very fast writes or updates for non-partition-key items.

Redshift:

Fully managed, clustered petabyte-scale data warehouse

Extremely cost-effective compared to other on-premises data warehouse platforms

PostgreSQL-compatible, with JDBC and ODBC drivers available; compatible with most BI tools out of the box

Features parallel processing and columnar data storage, which are optimized for complex queries

Option to query data files on S3 directly via Redshift Spectrum

Multitenancy on Amazon Redshift

Amazon Redshift also places some limits on the constructs that you can create within each cluster. Consider the following limits:

* 60 databases per cluster

* 256 schemas per database

* 500 concurrent connections per database

* 50 concurrent queries

* Access to a cluster enables access to all databases in the cluster

Datalake:

Query raw data without extensive pre-processing

Lessen time from data collection to data value

Identify correlations between disparate data sets

Neptune:

Fully managed graph database

Supports open graph APIs for both Gremlin and SPARQL
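Graph queries are traversals over relationships rather than joins. In Gremlin, one hop along a hypothetical "knows" edge is g.V('alice').out('knows'); this plain-Python sketch (a hypothetical in-memory graph, not Neptune or a Gremlin client) repeats that hop breadth-first to find everyone within N hops.

```python
from collections import deque

# Hypothetical social graph: vertex -> vertices it has a "knows" edge to
knows = {
    "alice": ["bob", "carol"],
    "bob": ["dave"],
    "carol": ["dave", "erin"],
    "dave": [],
    "erin": ["alice"],
}


def within_hops(graph, start, max_hops):
    """Breadth-first traversal: every vertex reachable in 1..max_hops edges."""
    seen = {start}
    frontier = deque([(start, 0)])
    found = []
    while frontier:
        vertex, depth = frontier.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbour in graph.get(vertex, []):
            if neighbour not in seen:
                seen.add(neighbour)
                found.append(neighbour)
                frontier.append((neighbour, depth + 1))
    return found


print(within_hops(knows, "alice", 1))  # ['bob', 'carol']
print(within_hops(knows, "alice", 2))  # ['bob', 'carol', 'dave', 'erin']
```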

ElastiCache

Fully managed implementation of two popular in-memory data stores: Redis and Memcached

Push button scalability for memory, writes and reads

In memory key/value store - not persistent in the traditional sense

Billed by node size and hours of use

Web session store: with load-balanced web servers, store web session information in Redis so that if a server is lost, the session info is not lost and another web server can pick it up

Database caching: use Memcached in front of Amazon RDS to cache popular queries, offloading work from RDS and returning results to users faster.
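The usual pattern for this is cache-aside (lazy loading): check the cache, fall back to the database on a miss, then store the result with a TTL. A Python sketch where a dict stands in for Memcached and a hypothetical slow_query function stands in for RDS; the clock is injectable only so expiry can be tested.

```python
import time


class CacheAside:
    """Cache-aside: read the cache first, load from the database on a miss,
    then cache the result with a TTL so stale entries eventually expire."""

    def __init__(self, load_from_db, ttl_seconds=300, clock=time.monotonic):
        self.load_from_db = load_from_db
        self.ttl = ttl_seconds
        self.clock = clock
        self.cache = {}  # key -> (value, expires_at); stands in for Memcached

    def get(self, key):
        entry = self.cache.get(key)
        if entry is not None and entry[1] > self.clock():
            return entry[0]  # cache hit
        value = self.load_from_db(key)  # cache miss: go to the database
        self.cache[key] = (value, self.clock() + self.ttl)
        return value


db_calls = []


def slow_query(key):
    db_calls.append(key)  # record each trip to the hypothetical database
    return f"result for {key}"


cache = CacheAside(slow_query, ttl_seconds=60)
cache.get("top-products")
cache.get("top-products")
print(len(db_calls))  # 1: the second call was served from the cache
```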

Leaderboards: use Redis to provide a live leaderboard for millions of users of your mobile app.
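In Redis a leaderboard is typically a sorted set: ZINCRBY adds points to a member and ZREVRANGE returns the top members by score. This Python class only mimics those two operations in memory to show the idea; it is not a Redis client, and tie-breaking by name is this sketch's own choice.

```python
import heapq


class Leaderboard:
    """In-memory stand-in for a Redis sorted set used as a leaderboard."""

    def __init__(self):
        self.scores = {}

    def zincrby(self, player, points):
        # Like ZINCRBY: add points to the member, creating it if needed
        self.scores[player] = self.scores.get(player, 0) + points
        return self.scores[player]

    def top(self, n):
        # Like ZREVRANGE 0 n-1 WITHSCORES: highest first (ties broken by name here)
        return heapq.nsmallest(n, self.scores.items(), key=lambda kv: (-kv[1], kv[0]))


board = Leaderboard()
for player, points in [("ana", 10), ("ben", 30), ("cai", 20), ("ana", 25)]:
    board.zincrby(player, points)
print(board.top(2))  # [('ana', 35), ('ben', 30)]
```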

Streaming data dashboards: provide a landing spot for streaming sensor data on the factory floor, providing live real-time dashboard displays.

Memcached: if you need a simple cache, for example for database queries

Redis

If you need encryption, HIPAA compliance, or clustering support

High availability

Pub/sub capability

Geospatial indexing

Backup and restore

Alternatives to ElastiCache:

- Amazon CloudFront content delivery network (CDN): this approach is used to cache web pages, image assets, videos, and other static data at the edge, as close to end users as possible

- Amazon RDS Read Replicas: some database engines, such as MySQL, support the ability to attach asynchronous read replicas.

- On-host caching: a simplistic approach to caching is to store data on each Amazon EC2 application instance, so that it's local to the server for fast lookup

When deciding between Memcached and Redis, here are a few questions to consider:

- Is object caching your primary goal, for example to offload your database? If so, use Memcached.

- Are you interested in as simple a caching model as possible? If so, use Memcached.

- Are you planning on running large cache nodes, and require multithreaded performance with utilization of multiple cores? If so, use Memcached.

- Do you want the ability to scale your cache horizontally as you grow? If so, use Memcached.

- Does your app need to atomically increment or decrement counters? If so, use either Redis or Memcached.

- Are you looking for more advanced data types, such as lists, hashes, and sets? If so, use Redis.

- Does sorting and ranking datasets in memory help you, such as with leaderboards? If so, use Redis.

- Are publish and subscribe (pub/sub) capabilities of use to your application? If so, use Redis.

- Is persistence of your key store important? If so, use Redis.

- Do you want to run in multiple AWS Availability Zones (Multi-AZ) with failover? If so, use Redis.
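The checklist above can be encoded as a small decision helper. This Python sketch is one reading of the questions, not an official AWS rule: any Redis-only need (advanced data types, sorting/ranking, pub/sub, persistence, Multi-AZ failover) points to Redis, otherwise the simpler Memcached, and a Redis-only need combined with a wish for multithreaded large nodes is flagged as a trade-off. Atomic counters are left out because both engines support them.

```python
from dataclasses import dataclass


@dataclass
class CacheNeeds:
    advanced_data_types: bool = False       # lists, hashes, sets
    sorting_or_ranking: bool = False        # e.g. leaderboards
    pub_sub: bool = False
    persistence: bool = False
    multi_az_failover: bool = False
    multithreaded_large_nodes: bool = False  # a Memcached strength


def choose_engine(needs: CacheNeeds) -> str:
    """Apply the checklist: Redis-only features win, else the simpler Memcached."""
    redis_only = (needs.advanced_data_types or needs.sorting_or_ranking
                  or needs.pub_sub or needs.persistence or needs.multi_az_failover)
    if redis_only and needs.multithreaded_large_nodes:
        return "trade-off: Redis features vs. Memcached multithreading"
    return "Redis" if redis_only else "Memcached"


print(choose_engine(CacheNeeds()))                         # Memcached
print(choose_engine(CacheNeeds(sorting_or_ranking=True)))  # Redis
```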

References:

- https://d0.awsstatic.com/whitepapers/performance-at-scale-with-amazon-elasticache.pdf

- https://www.youtube.com/watch?v=SUWqDOnXeDw
